Summary

- While the hype surrounding Generative AI continues, it is also driving automation forward. Multi-agent systems (MAS) combine large language models into an entire network of collaborating agents to enable task automation.

- Open source frameworks such as CrewAI make setting up MAS and connecting them to APIs more user-friendly. However, key challenges such as stable performance and computational efficiency remain.

- To mitigate the impact of these problems, two solutions are proposed. One is methodological and recommends the increased use of well-defined tools and guardrails for agents. The second is of a technical nature and emphasizes the advantages of serverless cloud computing.

- The progressive networking of systems creates immense automation potential with MAS. But it also harbors risks such as increased resource consumption and non-transparent use of AI. Targeted use and controlled environments are therefore essential.

The current state of the GenAI hype

Generative artificial intelligence (GenAI) has made remarkable progress and promises to transform various industries through its ability to automate, create and improve. However, even with great advances, there are still limits to its practical application. Large language models (LLMs) for text processing have been called “stochastic parrots” because they are excellent at mimicking conversations and thought processes, but cannot truly understand them in a human way. Their effectiveness relies heavily on high quality input and human guidance. As GenAI becomes more widespread, organizations are shifting their focus from brainstorming about potential applications to critically evaluating its suitability and feasibility in specific use cases. Not every problem requires a state-of-the-art model; sometimes simpler tools work better. Employee training and continuous education remain an important factor in dealing with this complex issue.

Looking at the dynamics of the (Gen)AI market, it is still in its early stages and heavily dependent on the market leaders. Companies such as Nvidia have benefited from massive investments in AI infrastructure. However, end-user demand for the GenAI products of software vendors such as Microsoft is lagging behind, as product integration and value proposition remain a challenge. Nonetheless, rapid progress and infrastructure improvements ensure that GenAI will become a cornerstone of automation and productivity, even if questions remain about efficiency and environmental impact. The cost efficiency of the recently released models from Chinese start-up DeepSeek shows that there is still considerable potential for optimization.

Intelligence benefits from being networked. A next logical step to improve GenAI is therefore to build networks of collaborative agents instead of isolated models. Multi-agent systems (MAS), inspired by human collaboration, enable machines to share information, adapt and work together to solve complex, interconnected problems. These systems can redefine workflows and transform entire areas such as customer service, financial accounting and business intelligence. While this technology offers immense potential, thoughtful design, customization and innovation in multi-agent systems are key to unlocking their full value. The future is not just about smarter machines, but about intelligent collaboration between people, machines and entire networks.

Divide and conquer - automation with algorithms, robots and LLM multi-agents

The automation of manual tasks is one of the most important drivers of digitalization. Traditionally, it is based on fixed algorithmic programming. Computer-controlled processing instructions with strict patterns work well in stable environments, but have difficulties with variability or dynamic tasks. Robotic process automation (RPA) has been introduced to increase flexibility by mimicking human input and navigation. But again, this depends on rigorous implementation, stability and/or regular maintenance.

With the advent of machine learning (ML), automation became more adaptive, as systems can learn patterns and dynamically adjust their behavior. Although this was a significant step forward, the effectiveness of ML is still largely limited to language-based and programmatic environments such as natural language processing and data analytics. Multimodality, i.e. the ability to process multiple data types such as images and text in a single model, increases the potential of ML to navigate more complex environments. However, it is not yet stable and explainable enough to replace “rigid” automation. Roughly speaking, it is still easier to imitate what a human does with RPA than to imitate dynamic decision-making and its various execution options with ML. Highly specialized work environments and the challenges of orchestrating multi-step workflows remain major hurdles. Overall, we humans are particularly effective at varied tasks thanks to our flexible communication and coordination skills.

Given these limitations, it makes sense to focus on areas where ML-based automation already works well. Language-based and programmatic workflows are ideal for specialized LLMs acting as workers designed for specific tasks. We will therefore focus on LLM-based multi-agent systems, which are already in widespread use. These agents can communicate and collaborate with each other to manage more complex workflows. Broadly speaking, we split workflows into specialized subtasks and assign them to customized agents so that each part runs reliably. By enabling agents to pass data and tasks to one another, we can master complex processes end to end. Hence the title of this section: “Divide and conquer”.

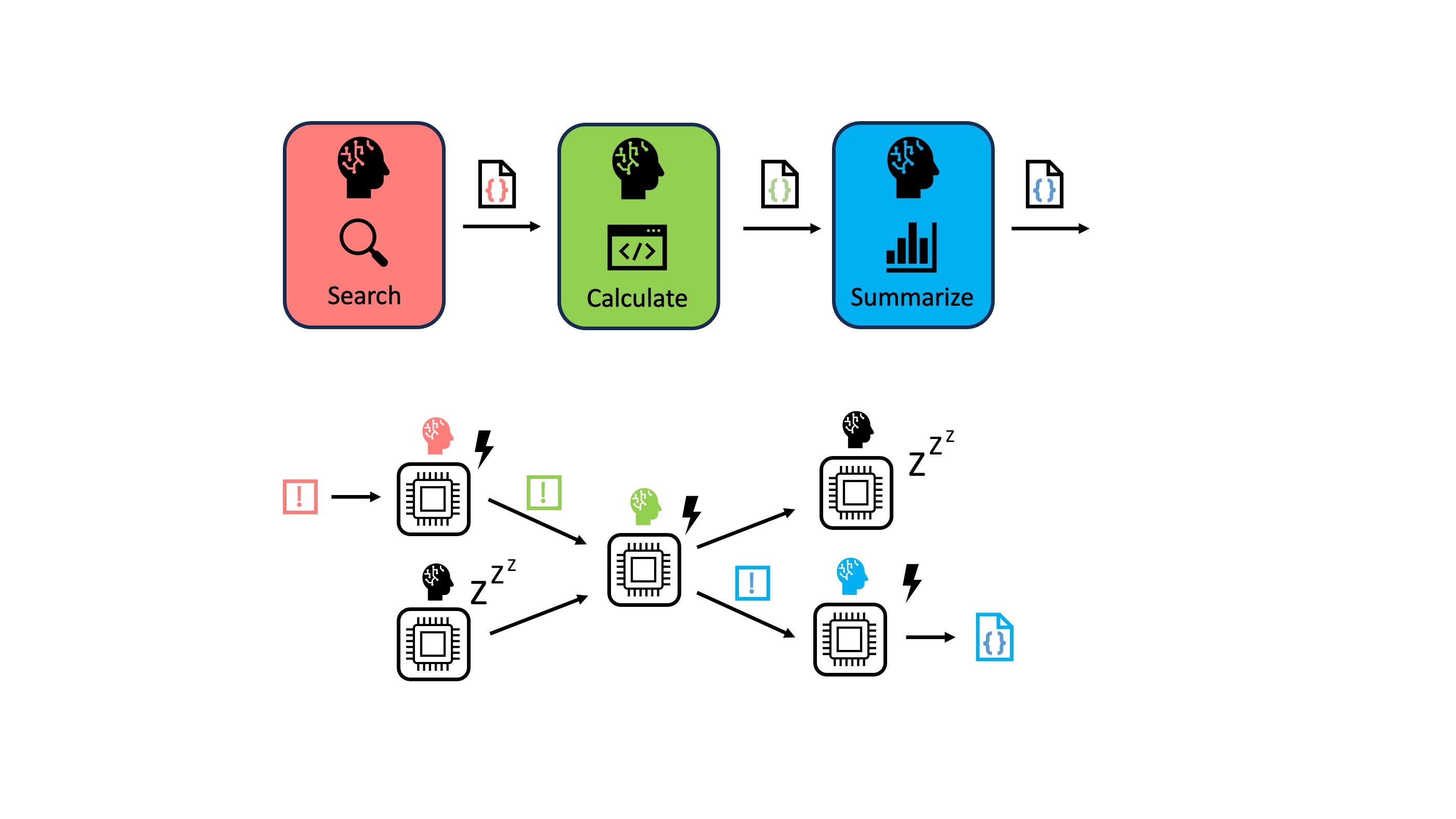

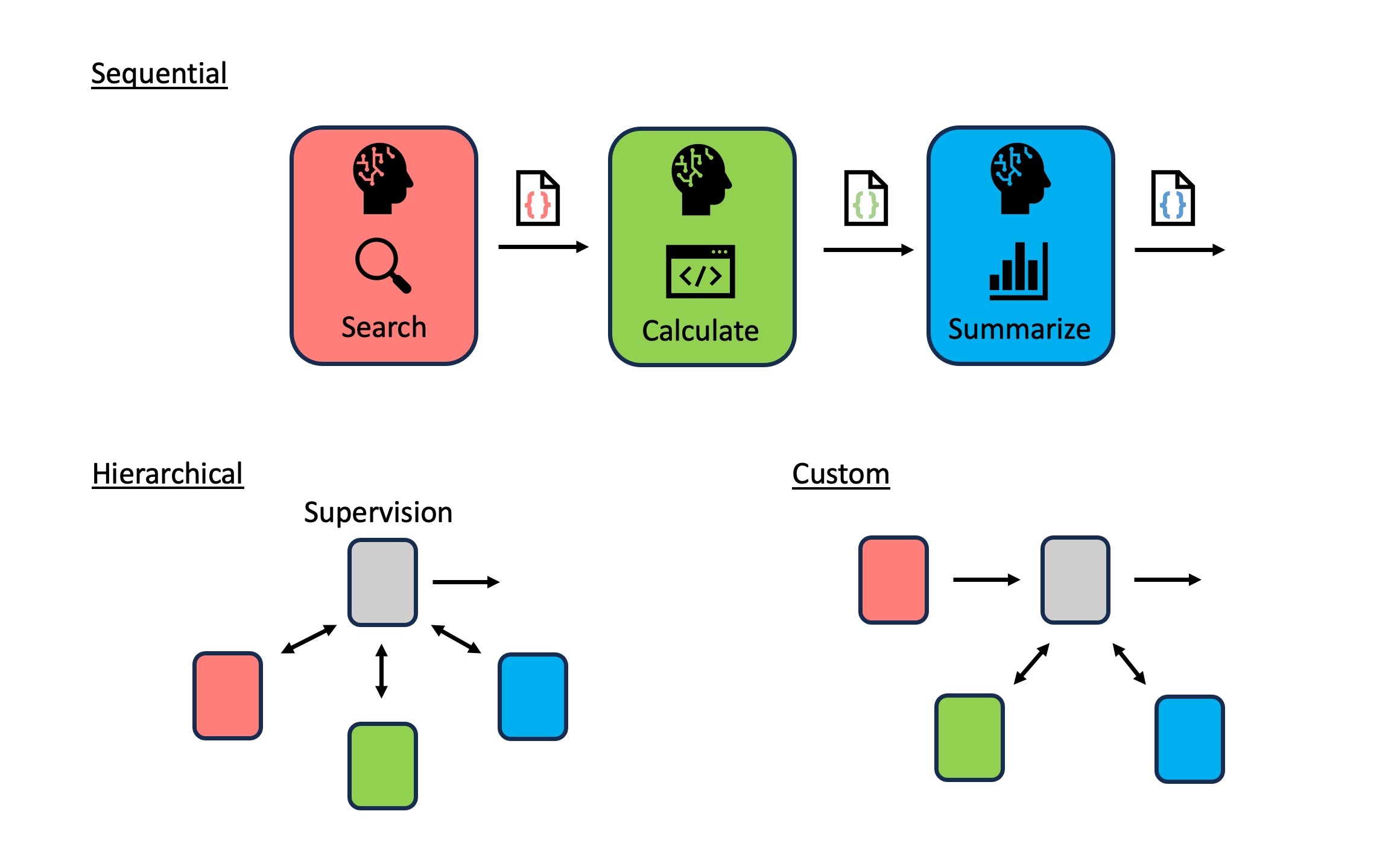

Coordination and communication within a multi-agent system are usually organized in one of two ways: sequentially, where tasks are completed step by step and agents hand over their work linearly, or hierarchically, where manager agents at a higher level monitor and control the handover between smaller, specialized units. The more structure and roles we introduce into agent coordination, the greater the potential for scalability and adaptability. This, in turn, brings new challenges, such as balancing each agent’s share of the conversation, avoiding redundant or conflicting actions and maintaining overall system stability under unpredictable conditions. Sounds pretty human, doesn’t it?
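To make the two coordination patterns concrete, here is a minimal plain-Python sketch. It assumes that an agent can be modeled as a function from text to text; the agents and the keyword-based manager are hypothetical stand-ins for LLM calls, not the API of any framework mentioned in this article.

```python
from typing import Callable, Dict, List

# Hypothetical agent type: text in, text out (a real agent would call an LLM).
Agent = Callable[[str], str]


def run_sequential(agents: List[Agent], task: str) -> str:
    """Sequential process: each agent hands its output to the next one in line."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def run_hierarchical(manager: Callable[[str], List[str]],
                     specialists: Dict[str, Agent],
                     task: str) -> Dict[str, str]:
    """Hierarchical process: a manager decides which specialists receive the task."""
    return {name: specialists[name](task) for name in manager(task)}


# Toy specialists and a rule-based manager standing in for LLM-backed agents.
def finance_agent(task: str) -> str:
    return f"finance report for: {task}"


def support_agent(task: str) -> str:
    return f"support ticket for: {task}"


def manager(task: str) -> List[str]:
    return ["finance"] if "invoice" in task else ["support"]


specialists = {"finance": finance_agent, "support": support_agent}
print(run_sequential([str.strip, str.upper], "  check invoice 42  "))
print(run_hierarchical(manager, specialists, "check invoice 42"))
```

In the sequential case the order of the list is the whole coordination logic; in the hierarchical case the routing decision lives in one place (the manager), which is what makes it easier to add specialists later.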

After this introduction to multi-agent systems, it is time to take a look at the current possibilities for implementation. Industries that are already using this technology give us an insight into the areas in which this form of automation is already being used successfully.

Widely used solutions and their focus (groups)

Open source or proprietary LLMs are the essential building block of LLM multi-agent systems. These models can only work in the collaborative structures mentioned above if they are surrounded by some orchestration logic. With open-source LLM frameworks such as LangChain, it is possible to program customized solutions. In contrast, specialized MAS frameworks and platforms offer pre-built solutions and are becoming increasingly popular due to their ease of use. Many of these platforms share common traits: they are Python-based, open source and community-driven. However, they differ in certain key aspects, which I briefly highlight in the table below.

| Framework | Core Focus | Customization | Special Features | Ease of Use | Potential |
|---|---|---|---|---|---|
| AutoGen | Highly adaptable LLM inference¹ | Extensive developer options and documentation | Docker-based and parallel execution | Steeper learning curve | Microsoft project with large starter community |
| CrewAI | Role-based agent design | Versatile through LangChain tool integration and documentation | Supports both open-source and proprietary LLMs; flexible task and tool management | Limited technical expertise sufficient | Valuable partners such as IBM and fast-growing community support |
| LangGraph | Graph-based agent interaction visualization | Versatile as LangChain’s own framework extension | Advanced cyclical workflows and memory management | Steeper learning curve; familiarity with graph structures required | Largest open-source community support |
| OpenAI Swarm | Lightweight multi-agent prototyping | Limited; experimental sample framework without official support | Educational focus, not recommended for production | Quick study for technical users | OpenAI project in early experimental stage |
| TaskWeaver | Code-first agents (language to executable code) | Plugin-centric for highly individual code development | Precise control over task execution and intermediate data manipulation | Steeper learning curve | Microsoft project, but community with low traction |

A key function of these platforms is to give agents access to a variety of data sources and processing tools through the integration of well-developed tools and APIs. For example, agents with access to LangChain Tools can query Google, publish code on GitHub or even access proprietary tools such as Power BI. This makes them particularly effective for tasks such as information retrieval, programming support and statistical analysis. These capabilities have made multi-agent systems popular in the consulting industry, whether in marketing, IT or finance. Such companies have the advantage of being able to combine sophisticated ML capabilities with established tools and are therefore pioneers in the application of MAS. Unsurprisingly, CrewAI primarily lists the Big Four consulting firms as customers and claims that 40% of Fortune 500 companies use its framework.

Adaptability and interconnectivity are great, but they also lead to trade-offs. MAS built with the above frameworks often rely heavily on robust model providers, reliable data sources and consistent libraries. This dependency makes them vulnerable, as disruptions to any of these components can impact functionality. While ensuring the stability of the infrastructure is critical, another layer of complexity arises from managing the behavior of the agents themselves. Intelligent agents, especially in dynamic environments, can exhibit unexpected or suboptimal behavior if they are not properly guided or controlled. This highlights the importance of robust mechanisms to control and monitor agent activity, maintain the integrity of the workflow and ensure consistent outcomes. As these platforms continue to evolve, it will be important to strike a balance between customization, stability and control to realize the full potential of agent-based automation. Let’s take a look at some of the methods we can use today to achieve this goal.

From sledgehammers to nutcrackers: give your agents the right tools!

When working with multi-agent systems, a common mistake is to give agents vague instructions and let them solve problems on their own. However powerful and adaptable agents may be at processing information, this approach can lead to undesirable results and wasteful self-correcting loops, or even deadlocks. A fitting metaphor is using a sledgehammer to crack a nut: an overpowering, inefficient approach to a specific problem that can also go badly wrong. Instead, it’s best to equip agents with concrete instructions and processing capabilities that balance their dynamic nature with simplicity and functionality.
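One simple way to keep self-correcting loops from running away is to pair a concrete output check with a hard cap on attempts. The sketch below assumes a hypothetical agent function that receives the attempt number (so the example is deterministic) and a plain validation function; it illustrates the guardrail idea and is not taken from any specific framework.

```python
from typing import Callable, Optional


def run_with_guardrail(agent: Callable[[str, int], str],
                       validate: Callable[[str], bool],
                       task: str,
                       max_attempts: int = 3) -> Optional[str]:
    """Call an agent until its output passes validation, but at most
    max_attempts times, so a self-correcting loop cannot run forever."""
    for attempt in range(1, max_attempts + 1):
        output = agent(task, attempt)
        if validate(output):
            return output
    return None  # hand over to a human or a fallback instead of looping again


# Hypothetical agent that only returns a valid number on its second attempt.
def flaky_agent(task: str, attempt: int) -> str:
    return "about twelve" if attempt == 1 else "12.5"


def is_number(text: str) -> bool:
    """Concrete acceptance criterion: the output must parse as a number."""
    try:
        float(text)
        return True
    except ValueError:
        return False


print(run_with_guardrail(flaky_agent, is_number, "average material cost"))
```

Returning `None` instead of retrying indefinitely makes the failure explicit, so the surrounding workflow can decide how to escalate.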

Let’s take a look at the three basic components of a multi-agent system:

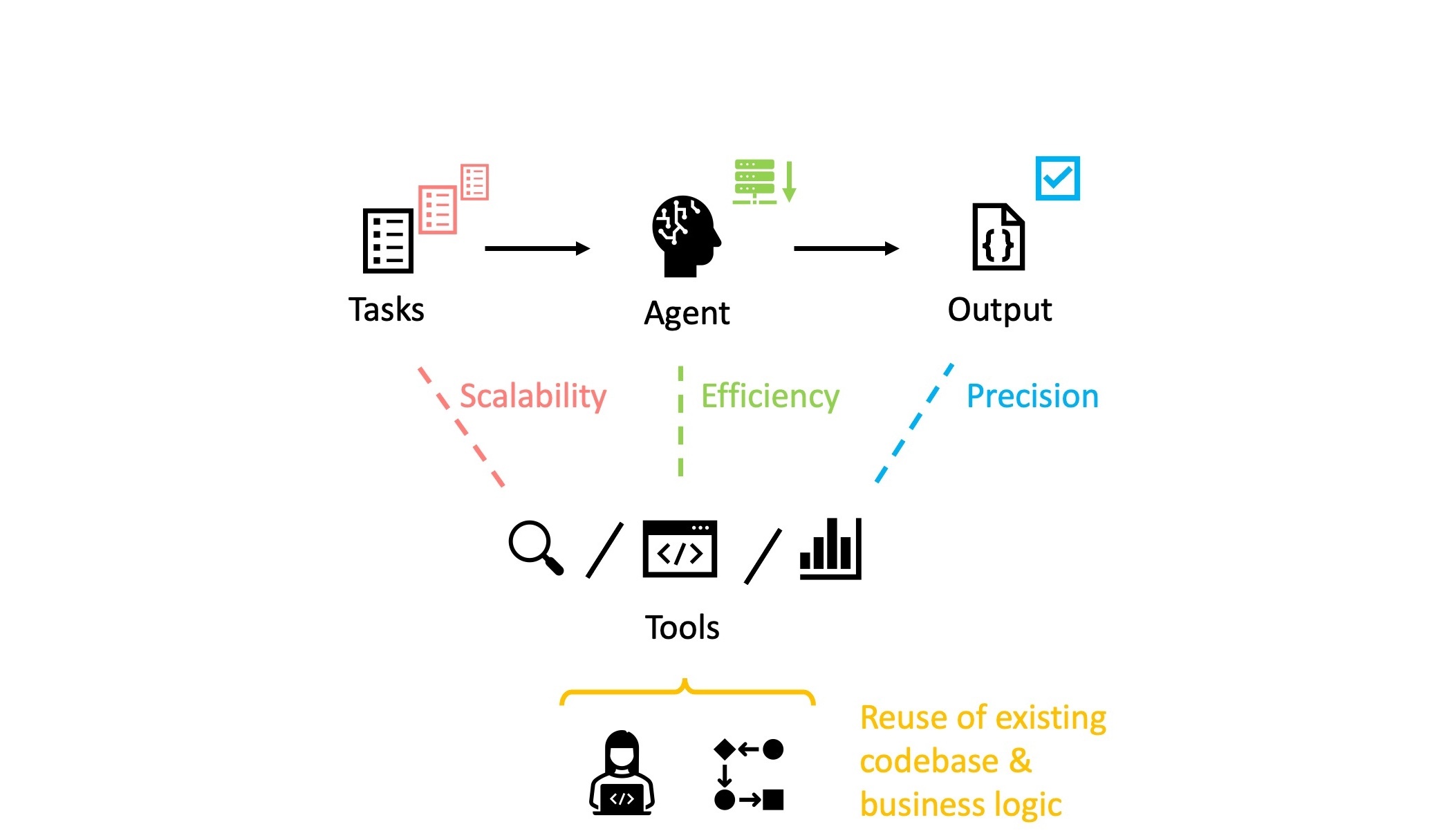

- Agents (also “roles”/“nodes”/“bots”): The autonomous entities tasked with solving specific problems. They can be asked to exhibit certain behaviors that guide them when interacting with tasks and tools. Example: “You are an expert in identifying missing data and are able to use web search tools to find suitable replacements if required for your task.”

- Tasks (also “workflows”/“conversations”): The goals or problems assigned to agents. A clear scope and conditional instructions help to control execution. Example: “Search the inventory database for average costs for the following material categories in 2024. If there is no historical data for 2024, you can use other sources to find industry averages.”

- Tools (also “functions”/“plugins”/“actions”/“APIs”/“capabilities”): Mechanisms or resources that agents use to accomplish their tasks. Usually defined as programmatic functions or interfaces. Example: Execution of the Serper API to perform a web search on platforms such as Google.
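The three components above can be modeled with a few data classes. This is a minimal sketch: the class and field names are hypothetical and only mirror the concepts; frameworks such as CrewAI provide their own agent and task classes with different signatures. The web search is stubbed out, since a real call to a search API would need credentials.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Tool:
    """A programmatic capability an agent may call (e.g. a web search wrapper)."""
    name: str
    description: str
    func: Callable[[str], str]


@dataclass
class Agent:
    """An autonomous role with a behavioral instruction and a set of allowed tools."""
    role: str
    instruction: str
    tools: Dict[str, Tool] = field(default_factory=dict)

    def use(self, tool_name: str, query: str) -> str:
        # Agents may only call tools they were explicitly equipped with.
        if tool_name not in self.tools:
            raise PermissionError(f"{self.role} has no tool '{tool_name}'")
        return self.tools[tool_name].func(query)


@dataclass
class Task:
    """A goal with a clear scope, assigned to exactly one agent."""
    description: str
    agent: Agent


# Stub standing in for a real web search API call.
def fake_search(query: str) -> str:
    return f"results for '{query}'"


search = Tool("web_search", "Search the web for industry averages.", fake_search)
analyst = Agent(
    role="data gap analyst",
    instruction="Identify missing data and use web search to find replacements.",
    tools={search.name: search},
)
task = Task("Find average 2024 steel costs if inventory data is missing.", analyst)
print(task.agent.use("web_search", "average steel cost 2024"))
```

Restricting each agent to an explicit tool dictionary is one way to express the “specialist, not generalist” idea in code.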

By equipping your agents with well-defined tools, you can significantly improve their performance in several ways. Scalability becomes a key benefit, as systems are able to handle more diverse and demanding tasks and workflows. Another benefit is efficiency, as the tools streamline operations and minimize agents’ computational load, allowing them to focus on their specific tasks without being overwhelmed by unnecessary complexity. In addition, precision is greatly improved as agents are empowered to produce consistent and accurate results, reducing errors and ensuring reliability across all tasks. By using tools to extend their capabilities, agents can complete tasks that would otherwise be beyond their scope, contributing to the overall robustness and adaptability of the system.

When developing tools, existing code repositories can be a practical starting point. Many organizations have reusable components within their infrastructure that can be turned into specific tools for agents. Similarly, business logic should guide the development of tools so that they reflect real-world requirements and meet the specific needs of the tasks at hand. In addition, LLM chatbot agents themselves can assist in creating tools, either by generating templates or by improving and rewriting the existing code base.
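As an illustration of turning existing code into a tool, the sketch below wraps an already tested function in a tool description built from its name, docstring and type hints, which is roughly the kind of metadata an LLM sees when choosing a tool. The `as_tool` helper and the `net_price` business function are hypothetical examples; only the standard-library `inspect` module is used.

```python
import inspect
from typing import Any, Callable, Dict


def as_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Wrap an existing function as an agent tool description.

    The name, docstring and parameter types become the metadata an agent
    sees when deciding which tool to call; the function itself is unchanged.
    """
    param_types = {}
    for name, p in inspect.signature(func).parameters.items():
        # Fall back to "any" when a parameter has no type annotation.
        has_type = p.annotation is not inspect.Parameter.empty
        param_types[name] = p.annotation.__name__ if has_type else "any"
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": param_types,
        "call": func,
    }


# Hypothetical business function taken from an internal repository.
def net_price(gross: float, vat_rate: float) -> float:
    """Convert a gross price into a net price for a given VAT rate."""
    if not 0 <= vat_rate < 1:
        raise ValueError("vat_rate must be a fraction, e.g. 0.19")
    return round(gross / (1 + vat_rate), 2)


tool = as_tool(net_price)
print(tool["name"], tool["parameters"])
print(tool["call"](119.0, 0.19))
```

Because the business rule (the VAT range check) stays inside the original function, the agent cannot bypass it, however it phrases its call.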

And finally, remember that it’s not the tool that matters, but how you use it. Agents perform best when they are used as specialists rather than generalists. Like human experts, they are most effective when they focus on a precisely defined area and use the tools provided to them with precision. At the same time, agents should not be reduced to mere triggers for entire tool chains. This approach limits their potential and does not fully exploit their ability to adapt and make intelligent decisions. By breaking down the processing logic into smaller, modular components, we can use agents as intelligent middleware that bridges gaps and manages intermediate steps effectively. Think “divide and conquer”.

For those who want to take a more technical look at things, I’ve created a handy tutorial. There you will also find tips on how to introduce guardrails to create a good balance between agent creativity and control of results.

How can I set up a MAS in a labor- and cost-efficient way?

One advantage of LLM agents is that they can be technically equipped to interact directly with other IT systems. Many modern processes rely on applications with graphical user interfaces (GUIs) that are designed to capture human input and display output. With full automation, we can bypass these interfaces and their complex environments by having agents work at the API or command level. This not only speeds up the development process, but also simplifies integration into existing systems.

This can also pave the way for an API-first strategy (more on this in a separate post). For example, agents can act as intermediaries that parse changing system inputs to meet formatting requirements. Agents that act as buffers in this way allow for more flexible communication between components. They can also interpret, map and transform inputs and outputs to ensure seamless interaction and minimize the need for costly and labor-intensive manual adjustments.
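The buffering role described above boils down to mapping inputs with varying field names and formats onto the schema a downstream API expects. In an agent-based setup an LLM would propose that mapping; in this sketch a rule-based mapping stands in for it so the example runs offline. The target schema, field aliases and date formats are hypothetical, and the amount parsing assumes a comma is used as the decimal separator.

```python
from datetime import datetime
from typing import Any, Dict

# Hypothetical target schema expected by a downstream API, with known aliases.
FIELD_ALIASES = {
    "customer_id": ["customer_id", "customerId", "cust_no"],
    "amount": ["amount", "total", "sum_eur"],
    "date": ["date", "invoice_date", "created"],
}
DATE_FORMATS = ["%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y"]


def parse_date(value: str) -> str:
    """Try several known date formats and return ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value}")


def normalize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Map a record with varying field names and formats onto the target schema."""
    out: Dict[str, Any] = {}
    for target, aliases in FIELD_ALIASES.items():
        key = next((a for a in aliases if a in record), None)
        if key is None:
            raise KeyError(f"missing field for '{target}'")
        out[target] = record[key]
    # Assumes "," is a decimal separator (e.g. "99,90"), not a thousands separator.
    out["amount"] = float(str(out["amount"]).replace(",", "."))
    out["date"] = parse_date(str(out["date"]))
    return out


print(normalize({"cust_no": "C-17", "sum_eur": "99,90", "invoice_date": "31.01.2025"}))
```

Keeping the final output deterministic and schema-checked, even when an LLM proposes the mapping, is what lets such a buffer sit safely between two systems.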

Long-term efficiency depends on reusability and reduced complexity. An important strategy is to design agents and tools as modular, shareable components. By encouraging developers to share reusable code and frameworks instead of developing a separate agent or tool for each problem, redundancies and the associated costs can be reduced. Minimizing communication between agents is another important practice. Excessive back and forth between agents can increase computational overhead and the potential for errors. When each component is optimized to do its job with minimal dependency on the others, the system becomes more efficient and scalable.

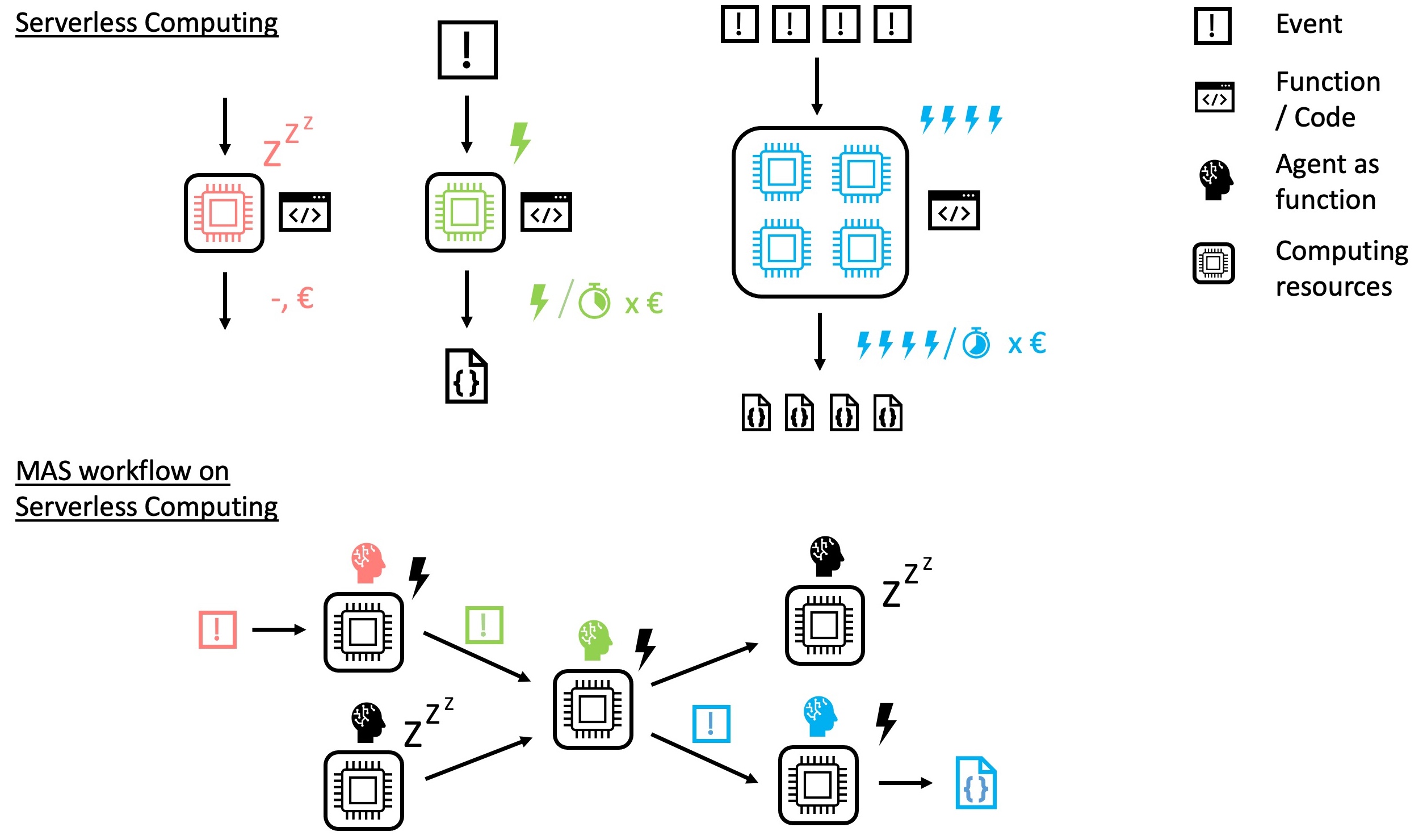

The scalability of multi-agent systems can be improved by using serverless computing power in the cloud. Serverless architecture eliminates the need to maintain a dedicated server infrastructure. It allows programs to run on demand without the overhead of provisioning, managing or scaling physical or virtual servers. Instead, computing resources are dynamically allocated by the cloud provider as soon as a user-defined trigger requires it.

This approach is perfect for the modular nature of multi-agent systems. In a serverless environment, agents, user-defined groups of agents and even shared tools can function independently of each other. They only perform tasks when they are needed and terminate afterwards. This modular execution model ensures that the system uses computing power efficiently and automatically adapts to workload requirements.
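A serverless function per agent could look roughly like the sketch below. It follows the `(event, context)` handler style used by AWS Lambda for Python, but the event shape (an HTTP-style event whose `body` is a JSON string), the agent registry and the toy agents are assumptions for illustration. The handler keeps no state between calls, since the provider decides when execution environments are created and reclaimed.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry: each agent (or shared tool) is an independent function.
AGENTS: Dict[str, Callable[[str], str]] = {
    "summarizer": lambda text: text[:50],
    "classifier": lambda text: "invoice" if "invoice" in text.lower() else "other",
}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point in the (event, context) style of AWS Lambda.

    Runs one agent per invocation and relies on no state from earlier calls.
    """
    body = json.loads(event.get("body") or "{}")
    agent = AGENTS.get(body.get("agent", ""))
    if agent is None:
        return {"statusCode": 400, "body": json.dumps({"error": "unknown agent"})}
    result = agent(body.get("input", ""))
    return {"statusCode": 200, "body": json.dumps({"result": result})}


# Local test call; in the cloud, the provider passes the event and context objects.
event = {"body": json.dumps({"agent": "classifier", "input": "Invoice #42 attached"})}
print(lambda_handler(event, None))
```

Deploying each agent or agent group as its own function is what allows them to scale independently of each other.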

Regardless of whether a system needs to handle a few isolated tasks or thousands of simultaneous operations, the serverless architecture adapts seamlessly and provides resources according to current demand. Serverless providers only charge for actual usage, usually based on metrics such as the number of function calls and execution time. Usage limits can also be set for these metrics. For multi-agent systems, which often have fluctuating workloads depending on the scope and complexity of their tasks, serverless computing offers a cost-effective infrastructure.
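The pay-per-use model can be estimated with simple arithmetic: a charge per request plus compute time weighted by allocated memory (GB-seconds), which is a common billing scheme. The prices and workload below are placeholders chosen for easy arithmetic, not any provider’s current rates, and the budget check is a deliberately simple stand-in for a provider’s usage limits.

```python
def serverless_cost(invocations: int, avg_seconds: float, memory_gb: float,
                    price_per_million_requests: float,
                    price_per_gb_second: float) -> float:
    """Estimate pay-per-use cost from call count and execution time.

    request cost = invocations / 1e6 * price per million requests
    compute cost = invocations * seconds * GB * price per GB-second
    """
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_seconds * memory_gb * price_per_gb_second
    return round(request_cost + compute_cost, 2)


def within_budget(cost: float, monthly_limit: float) -> bool:
    """A simple usage limit: allow further runs only while under budget."""
    return cost <= monthly_limit


# Hypothetical month: 200,000 agent calls of 4 s each with 0.5 GB memory,
# using placeholder prices of 0.20 per million requests and 0.00002 per GB-second.
cost = serverless_cost(200_000, 4.0, 0.5, 0.20, 0.00002)
print(cost, within_budget(cost, monthly_limit=10.0))
```

With these placeholder numbers, compute time dominates (400,000 GB-seconds versus 0.2 million requests), which illustrates why long back-and-forth agent conversations drive cost more than the number of calls.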

By focusing on API accessibility, reusability and scalable cloud computing, one can build MAS that balance immediate efficiency gains with sustainable, long-term growth. These strategies reduce labor-intensive processes, minimize costs and ensure that systems can be adapted to evolving technologies and requirements.

Looking ahead: The potential and challenges of multi-agent systems

The current state of MAS adoption shows growing usage but also significant challenges. A recent survey by Guo et al. (2024)² emphasizes the increasing integration of LLMs, which has enabled substantial progress in areas such as collaboration, decision-making and task execution. These advances have made MAS particularly valuable in areas that require adaptability, such as customer service, content creation and dynamic task management.

The survey identifies key challenges in the adoption of LLM-driven multi-agent systems. Those include unpredictability in high-stakes tasks, computational inefficiencies, and latency issues, particularly when scaling across interconnected environments. To mitigate these obstacles, advancements such as self-reflective capabilities in LLMs are being introduced to enhance reliability and minimize errors. Leading model providers, such as OpenAI, have already implemented these reasoning techniques to improve response quality. This helps to reduce the need for extensive input and output validation mechanisms. Despite these advancements, clear task definitions, robust tool integration, and domain-specific customization remain critical for effective deployment. While the adoption of MAS continues to expand, achieving widespread industry integration will require ongoing architectural refinement.

The increasing networking of systems through APIs and the potential for communication between multiple MAS platforms offers immense opportunities. Connected agents could share tasks and resources more dynamically, enabling systems to tackle increasingly complex problems. However, this networking also brings challenges. The back and forth communication between agents can lead to significant time delays and increased consumption of computing resources. Compared to lean, algorithmic processing or clearly defined API operations, such constant communication is far less efficient and environmentally friendly. The potential impact on the environment highlights the need to think carefully about how MAS are deployed to balance their performance with the pursuit of efficiency.

A further complexity arising from this interconnectedness is the increasing difficulty of identifying where and how AI is being used. For example, when MAS components in neighboring systems provide or consume data, the use of AI can be obscured, making transparency efforts more difficult. This is particularly relevant in light of regulations such as the EU AI Act, which imposes transparency obligations requiring companies to disclose certain uses of AI. The increasing interconnectedness of systems makes compliance more difficult, but also serves as a reminder of the importance of developing systems that are not only functional, but also transparent and accountable.
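One way to keep AI usage traceable across interconnected components is to record every call of an AI-backed function in an audit log. The sketch below uses a hypothetical `uses_ai` decorator and an in-memory list; the model name and purpose strings are placeholders. It illustrates the transparency idea only and is not a recipe for meeting the requirements of the EU AI Act.

```python
import functools
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Hypothetical in-memory audit log; a real system would use persistent storage.
AI_USAGE_LOG: List[Dict[str, Any]] = []


def uses_ai(model: str, purpose: str) -> Callable:
    """Decorator that records each call of a component that relies on AI."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            AI_USAGE_LOG.append({
                "component": func.__name__,
                "model": model,
                "purpose": purpose,
                "timestamp": datetime.now(timezone.utc).isoformat(),
            })
            return func(*args, **kwargs)
        return wrapper
    return decorator


@uses_ai(model="example-llm", purpose="classify incoming supplier emails")
def classify_email(text: str) -> str:
    # Placeholder for an LLM call made by an agent in a neighboring system.
    return "invoice" if "invoice" in text.lower() else "other"


classify_email("Please find the invoice attached.")
print([(entry["component"], entry["model"]) for entry in AI_USAGE_LOG])
```

Because the log entry is written by the decorator rather than by each component, AI usage stays visible even when the calling system does not know that an agent is involved.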

Despite these challenges, the evolution of MAS adoption is promising, provided we continue to focus on solving the right problems and maintaining flexibility in controlled environments. As the technology evolves, it will become increasingly important to align the application of MAS with clear goals and sustainable practices. If you want to explore the possibilities of multi-agent systems, you should start with controlled, practical applications. For starters, you can experiment with this guide to understand and implement your own MAS.

¹ ML inference: applying a trained model to new input to draw conclusions based on previously learned knowledge.

² Guo, T., Chen, X., Wang, Y., Chang, R., Pei, S., Chawla, N. V., Wiest, O., & Zhang, X. (2024). Large Language Model based Multi-Agents: A Survey of Progress and Challenges. arXiv preprint. https://arxiv.org/abs/2402.01680